Reinforcement Learning from Human Feedback: When the Math Ain't Enough

Hundreds of brightest people at ICML '23 were in the room at the RLHF tutorial. Speaker asked who wanted to annotate the data. Only five, maybe ten people, raised their hands. That surprised no one.

I was very happy to travel to ICML 2023 in Hawaii to give a tutorial on Reinforcement Learning for Human Feedback (RLHF) together with

from Hugging Face. We recently published the tutorial slides, and in this article, I will provide a brief transcript of my part focused on data labeling aspects of RLHF.This post is also available in other languages: Chinese, Russian.

Introduction

Who wants to do reinforcement learning from human feedback?

The entire room raises their hands.

Great! And who wants to annotate the data to obtain human feedback?

Only five, maybe ten hands remain.

I believe it is very important to talk about data labeling when it is not what everyone wants to do. As you surely know, RLHF stands for reinforcement learning from human feedback, and both aspects are important. It is not enough to implement the distributed training routines for our large language models (LLMs) if our underlying datasets do not show good examples of what we want from the model.

We want the LLMs to be helpful, harmless, and honest at the same time. But computers do not know what it means yet, so we need human feedback to align the models with our preferences, evaluate their outputs, and avoid tedious reward engineering in RL.

What to Annotate?

Deep learning allows us to train the models, but there is so much interesting about the optimal way of training LLMs. There are diverging opinions about their capabilities and the size of effort needed to make them efficient. There are two examples. Funny enough, both came from the same organization, Meta:

In a recent pre-print, Zhou et al. (2023) proposed the Superficial Alignment Hypothesis. They said the models already know everything, and you only need to show them the desired input and output formats. So, we don’t need complex annotation schemes; only a small amount of instructions is required for success. It would be big if true; let’s see if this manuscript gets accepted.

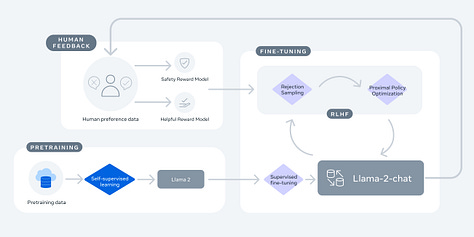

In contrast, in the Llama 2 paper, Touvron et al. (2023) claimed that “superior writing abilities of LLMs are fundamentally driven by RLHF,” implying a fair amount of necessary annotation.

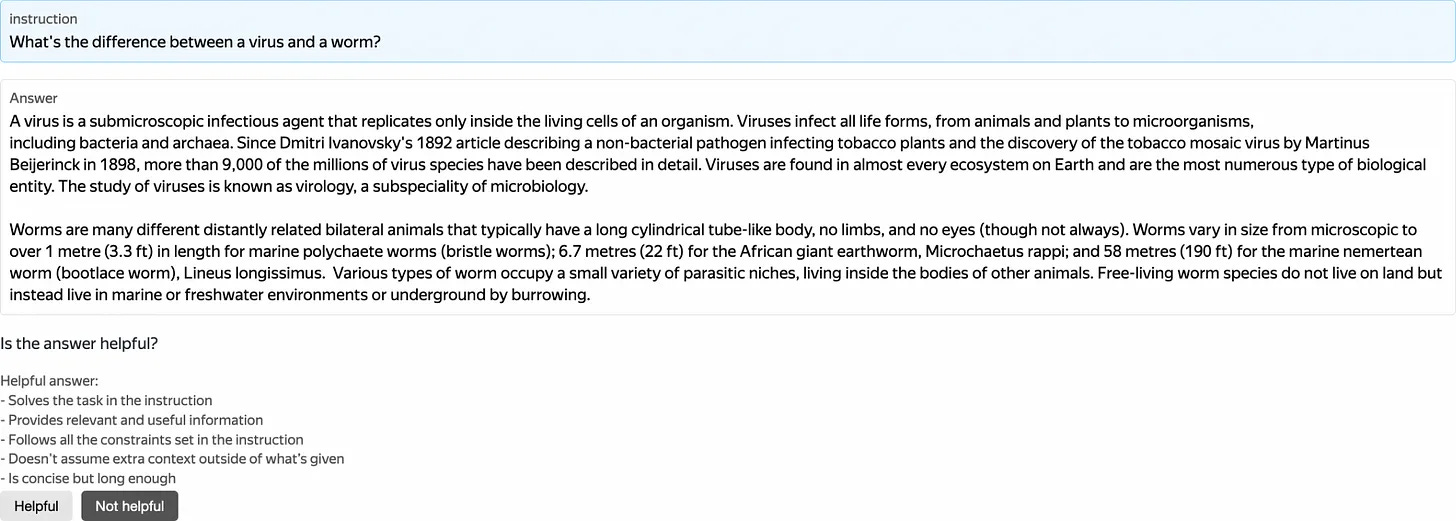

Now look at three more examples and try noticing some patterns. First, the now-famous OpenAI’s InstructGPT diagram (Ouyang et al., 2022), then a similar diagram from Anthropic’s Claude (Bai et al., 2022), and last but not least the one from Meta’s Llama 2 (Touvron et al., 2023).

There are three common steps in these three diagrams:

We initially train the model to perform next word prediction on a huge collection of documents called a text corpus. No annotation is needed.

Then, we perform supervised fine-tuning (SFT) on a smaller collection of carefully-written instructions and responses.

Finally, we refine the behaviour of our model using human preferences (reinforcement learning process with reward modeling).

Even though each step is somewhat intuitive, the devil is in the details. How much annotation is needed? How many iterations? Which texts? What expertise is required? It’s all about making design decisions.

We need human insights on texts and scores, fast, correct, and at scale. For supervised fine-tuning, we can use synthetic, crawled, or labeled data. For reward modeling, we need to get human preferences.

A Quick Tour to the Basics of Data Labeling

Before we proceed to the data-centric aspects of RLHF, I need to make a short stop on the basics of data labeling.

Who annotates the data? There are many options:

well-trained annotators (experts)

crowds of non-expert annotators (crowdsourcing)

pre-trained machine learning models

any combination of the above three

Regardless of who annotates the data, we must design instructions and means for quality control.

Data Labeling with Crowdsourcing

I will focus on crowdsourcing as it is the most scalable approach, and it generalizes well to other kinds of annotation. I have studied crowdsourcing since 2012 and worked at one of the biggest data labeling platforms for four years. Based on my experience, I strongly believe that the core challenge in data labeling is to make the annotators understand the task the same way as you do.

Usually, annotation is performed on a specific platform. There are on-premise platforms like Label Studio, CVAT, Prodigy, and hosted platforms like Mechanical Turk, Scale, Surge, etc. The good thing about on-premise platforms is that you can deploy them on your infrastructure and adjust them for your needs. The good thing about hosted platforms is that they provide annotators and payment options, which is extremely important in labeling—someone has to do it.

Core Components of Data Labeling

There are six core components of a successful data labeling project: decomposition, instruction, task interface, quality control methods, annotation reliability, and a trade-off between speed and cost. The most important are task decomposition and instruction.

Task decomposition is splitting the original difficult task into a pipeline of smaller, simpler subtasks. The subtasks are given to the crowd of annotators so that every subtask receives responses from two or more different annotators.

The best thing about task decomposition is the possibility of solving extremely tough problems. For instance, what if we want to annotate an image dataset with bounding boxes? We can use a sequence of two subtasks: first, we ask to draw a bounding box, and then, we ask the annotators to indicate if it was drawn correctly.

A good task instruction contains the goal of the annotation task, annotator interface overview, required action items, examples of good and bad answers, intuition for resolving rare and non-obvious cases, and reference materials. It is very hard to write a good instruction on the first try; check out No Vehicles in the Park as a good illustration of this problem.

Usually, if the decomposition is done well, instruction and interface become simple, annotators perform tasks with better quality, standard quality control techniques work reliably, and it is easy to control and optimize the pricing.

Now let’s return to our main agenda.

Supervised Fine-Tuning

During the initial model training and supervised fine-tuning, inputs are texts, and the model learns to predict the next word. Texts are usually taken from publicly-available corpora: Common Crawl, RefinedWeb (Penedo et al., 2023), The Pile (Gao et al., 2020), etc. How do we obtain good instruction prompts and responses?

Some organizations found meaningful kinds of prompts. Let’s look at OpenAI’s InstructGPT again (Ouyang et al., 2022): Generation (45.6%), Open QA (12.4%), Brainstorming (11.2%), Chat (8.4%), Rewrite (6.6%), Summarization (4.2%), Closed QA (2.6%), Classification (3.5%), Other (3.5%), and Extract (1.9%). These kinds and numbers are non-arbitrary! The OpenAI team analyzed and annotated their GPT-3 user interaction logs and built the SFT dataset following this real-world usage distribution.

Besides the kinds of prompts, the main question is how much data we need. I analyzed the recent papers and obtained the following numbers:

Llama 2 (Touvron et al., 2023): 28K

InstructGPT (Ouyang et al., 2022): 15K

Alpaca (Taori et al., 2023): 52K

Vicuna (Chiang et al., 2023): 70K

Dolly (Conover et al., 2023): 15K

OpenAssistant (Köpf et al., 2023): 10K+

Claude (Bai et al., 2022): 137K + 369K

WizardLM (Xu et al., 2023): 624K

LIMA (Zhou et al., 2023): 1K

The dataset size does not matter, you need really good prompts and responses!

How to Get the Texts?

How do we get these texts? There are three options:

Model-derived datasets, which are based on user interaction with the available models. Unfortunately, some vendors prohibit training competing models on these datasets, but some people do it for research purposes anyway.

Web-based datasets employ Web data from communities like Reddit, Quora, Stack Exchange, and the link, but the licenses are often unclear, and additional data cleansing is required.

Crowdsourced datasets that imply expert or crowd-based annotation, which is the safest, but the most labor-intensive option.

Here is the summary of the five popular publicly available datasets with prompts and responses.

Dolly is an in-house expert-annotated dataset of 15K items annotated by the Databricks team.

Alpaca is a model-derived dataset obtained by transforming 175 self-instruct seed tasks into 52K items using the OpenAI’s GPT-3.5 model.

WizardLM is a model-derived dataset that used a set of rules to complicate and re-arrange the Alpaca dataset to obtain a larger dataset of 624K items.

ShareGPT is a browser plugin that downloads the ChatGPT conversations and stores them on a centralized server. The data license is unclear, but a well-performing Vicuna model was trained on a model-derived subset of 70K items.

OpenAssistant is an open-source crowdsourced multilingual dataset with prompts and instructions, a model, and a reusable annotation framework. It comes from LAION, the organization behind a popular Stable Diffusion model. But we need to ensure that volunteered interactions are similar to the ones used to train state-of-the-art LLMs.

Whenever the model-derived approach is used, one can replace the upstream model with human labeling.

How to Annotate the Texts?

The initial prompts should be written by experts or taken from trusted Web corpora as they are an essential part of supervised fine-tuning data. However, we can safely annotate the responses to these prompts.

One of my favourite examples of crowdsourcing was published in a paper by Bernstein et al. (2010) and is called Soylent. A group of researchers created a plugin for a popular word processor that performed text summarization very efficiently without any machine learning methods using crowdsourcing instead. They proposed a general-purpose annotation pattern called Find-Fix-Verify in which the original text summarization task was decomposed into three subtasks:

Find. Given a text sample, select a problematic span.

Fix. Given the text sample and the problematic span, write the better one.

Verify. Classify whether the written span is better. (Yes/No)

We can adopt a similar approach to compose responses. At the first step, we ask the annotators to write the response to the given prompt. And at the second step, we ask them to verify the goodness of that response. Both must have identical instructions so the composers and verifiers have the same guidelines.

As a result, instead of solving a very difficult problem of writing responses, we are solving a much simpler problem of aggregating binary labels. And this problem is more or less solved by the research community (Zheng et al., 2017). For smaller datasets, one should choose the majority vote, and for larger datasets with more than 1K responses, one should use the Dawid-Skene (1979) probabilistic aggregation model.

Data annotation for supervised fine-tuning is vital yet uneasy to do correctly. It’s all about design decisions. Where do you get initial prompts? Are you going to use synthetic data? Will you involve expert annotators? What prompts do you need, and how do you aggregate the data? I strongly recommend using pre-annotated golden tasks for the Verify step to evaluate the annotators (golden tasks are still the most efficient quality control method in crowdsourcing). And be careful about the licenses.

Human Preferences

After the initial language model was trained and fine-tuned, we polish it to be helpful, harmless, and honest. Unlike the supervised fine-tuning part, human preferences have a much simpler task design. As RLHF requires a huge amount of labels and we cannot trivially transform human opinions into a reward function, we will be approximating human scores with a reward model.

Given the prompt and the response, the reward model estimates how a human would rate it. So we need to design the human labeling task that depends on our LLM training details. Are we generating only two responses per prompt? Good, then we can stick to the simple binary classification task design. What if we, like InstructGPT, have more? In this case, the situation becomes less trivial, and we have to perform ranking aggregation.

Ranking Annotation

What are the possible options for ranking?

Pointwise. Given a prompt and responses, provide a single numerical score for each response. Unfortunately, different annotators have subjective scales, which complicates the further use of the data. Some communities, like machine translation, perform score standardization during post-processing (Adelani et al., 2022), but still, it is not the most reliable way to obtain good rankings.

Listwise. Given a prompt and responses, order them from best to worst (or vice versa). Although it feels intuitive from the annotation perspective, it is not clear how to integrate this into the training procedure.

Pairwise. Given a prompt and responses, sample and annotate pairs of responses, and transform the annotated pairs into individual response scores using the algorithms like Bradley-Terry (1952).

Most studies use pairwise comparisons. You might be interested in publicly-released annotator instructions for human preferences from OpenAI and Hugging Face.

How Much to Annotate?

Touvron et al. (2023) already performed an excellent analysis of the available human preference datasets; see Table 6:

Anthropic Helpful: 122K

Anthropic Harmless: 44K

OpenAI Summarize: 177K

OpenAI WebGPT: 20K

Stack Exchange: 1038K

Stanford SHP: 75K

Synthetic GPT-J: 33K

As the preferences are subjective, preparation of golden tasks becomes harder. I recommend using synthetic data from a worse-performing model, a previous snapshot of our model, obvious responses, or another dataset with a similar topic. We successfully used a smaller model in the past; see Pavlichenko & Ustalov (2023).

Reward Model Structure

Even though the pairwise comparisons are not very difficult to implement, we have another design decision to be made: what granularity of feedback are we incorporating into our reward model? If we compare only pairs of responses, what makes one response better than the other? Being only factually correct is not enough since the responses might be offensive, rude, or malicious. So we might be willing to implement multi-dimensional quality criteria like helpfulness, harmlessness, and honesty (Bai et al., 2022). But will you train one reward model for everything or blend three models, each corresponding to the dedicated criterion?

Reward modeling allows the LLM to get its personality and maintain a trade-off between helpfulness, harmlessness, and honesty. However, as usual, we have to make difficult design decisions. What is the number of responses per prompt? What is our sampling approach? How do we structure our reward model and our annotation task design? If you proceed with the annotation, use synthetic data for quality control. For longer texts, incentivize the annotator’s expertise. Note that a similar annotation approach can be used for the red-teaming post-processing step after the training is finished.

Conclusion

Here are the three key takeaways that the attendees should remember from this tutorial:

The scale of annotation is 10K+ prompts for supervised fine-tuning and 100K+ for human preferences.

Focus on smaller datasets of higher quality rather than on larger uncontrolled datasets since data labeling is not easy.

The annotators must understand the task the same way as you do, so use synthetic data and cross-checks for quality control during the annotation.

Currently, I see three topics for future work. First, as our current estimates of the needed data are based on trial and error, what are the theoretical requirements? Second, what are the more important instruction kinds and how to find them? Third, what is the optimal pipeline and task design?

I mentioned several aggregation techniques and quality control methods in crowdsourcing. My team created an open-source Python library called Crowd-Kit. It efficiently implements all of them, provides data quality and inter-annotator agreement metrics, and dataset loaders for faster prototyping. I highly recommend using it when you are working with crowdsourced datasets.