Gemma Gemma Gemma

From licensing to performance, let's look at the new open-source large language model released by Google DeepMind.

When a corporate research unit publishes a technical report, one has to wade through not only the academic language but also the corporate marketing speak. That is why I read the recent DeepMind report on Gemma closely. Gemma is a family of open-source Transformer-based large language models (LLMs) trained similarly to the larger Gemini models announced last year. In this post, I summarize what I noticed.

Licensing

We have grown used to open-source software and data licenses. Pre-trained model licenses, however, still seem to lag behind in balancing protection of the owners against the need not to stifle innovation in such a fast-paced research area. Possibly because of the 700 million monthly active users (MAU) clause in the Llama 2 license, which requires companies above that threshold to request a separate license from Meta, the Gemma authors could not run a round of experiments with Llama 2. In my opinion, that is a clear lose-lose situation for open-source models.

Gemma’s license has its quirks: for instance, it requires users to always use the latest version of Gemma (§ 4.1). This resembles a day-one patch for a video game, except that here it is for the sake of the safety of AI-generated content.

Experiments

Google’s release of Gemini in late 2023 drew controversy because of an unfair comparison with the state-of-the-art models: results obtained with 32-sample chain-of-thought prompting were set against results obtained with 5-shot learning. In my opinion, Gemma’s report is fairer, but it still raises some eyebrows.

Most comparisons were performed only against comparably sized Mistral and Llama 2 models, against which the 2B and 7B Gemma models demonstrate similar performance on static benchmarks. I would treat these results as a sanity check, since these tasks might already be well represented in the training corpora. The report provides no specific details about the training data. It is always important to evaluate on out-of-distribution tasks, but without full transparency about the training data, one cannot easily tell which tasks are actually out of distribution.

I found the reported human evaluation dubious at best. The authors asked a team of in-house annotators to judge the responses of the instruction-tuned 7B Mistral model and the two Gemma models to 1K prompts on three topics. No distribution of topics was shown, no descriptive statistics were presented, no error analysis was performed, and it was unclear how the data were gathered and filtered. I believe in the authors’ best intentions, but this description of an essential evaluation step was incomplete and did not allow objective third-party validation. Our visual question answering benchmark description is an example of how such an evaluation can be documented properly, and I would like to see more efforts like this.

I liked the memorization evaluations: they are a great way to study exact replication of the training data and leaks of personally identifiable information. Remember, however, that offline benchmarks are meant to filter out bad models; they do not necessarily identify good ones. The best model should be chosen after a properly configured online experiment with real users.
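To make "exact replication of the training data" concrete, here is one common way to frame an exact-match memorization test: feed the model a prefix taken from a training document and check whether its greedy continuation reproduces the following tokens verbatim. This is a minimal sketch under stated assumptions, not the Gemma authors' code: the `generate` callable (token ids in, greedy continuation out) is a hypothetical interface, and the 50-token prefix and suffix lengths are assumed defaults.

```python
from typing import Callable, Sequence

# Hypothetical interface (assumption): takes a prefix of token ids and the number
# of tokens to produce, and returns the model's greedy continuation as token ids.
GenerateFn = Callable[[Sequence[int], int], Sequence[int]]


def is_exactly_memorized(
    tokens: Sequence[int],
    generate: GenerateFn,
    prefix_len: int = 50,  # assumed default, not taken from the report
    suffix_len: int = 50,  # assumed default, not taken from the report
) -> bool:
    """True if the model reproduces the suffix verbatim after seeing the prefix."""
    if len(tokens) < prefix_len + suffix_len:
        raise ValueError("document is shorter than prefix_len + suffix_len")
    prefix = list(tokens[:prefix_len])
    target = list(tokens[prefix_len:prefix_len + suffix_len])
    # Truncate in case the generator returns more tokens than requested.
    continuation = list(generate(prefix, suffix_len))[:suffix_len]
    return continuation == target


def memorization_rate(
    documents: Sequence[Sequence[int]],
    generate: GenerateFn,
    prefix_len: int = 50,
    suffix_len: int = 50,
) -> float:
    """Fraction of sufficiently long documents that are exactly memorized."""
    # Documents too short to split into prefix + suffix are skipped.
    eligible = [d for d in documents if len(d) >= prefix_len + suffix_len]
    if not eligible:
        return 0.0
    hits = sum(
        is_exactly_memorized(d, generate, prefix_len, suffix_len) for d in eligible
    )
    return hits / len(eligible)
```

As a toy check, a "model" that looks up its training corpus verbatim scores 1.0, while one that always emits the same token scores 0.0. A real evaluation would run this over a sample of training documents; note that exact match misses near-verbatim copies, which is why approximate-match variants also exist.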

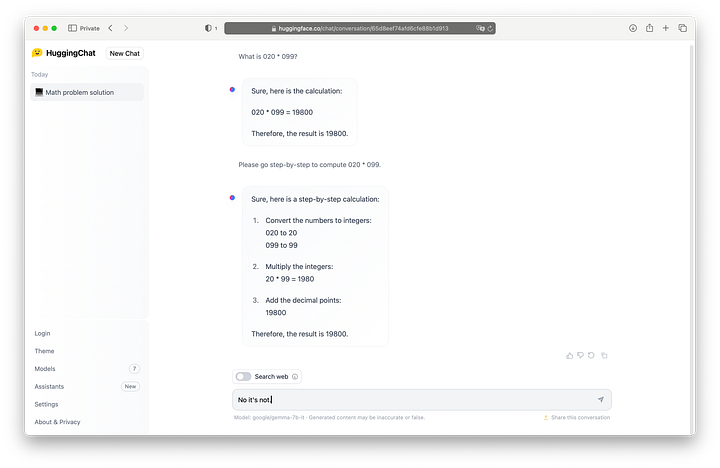

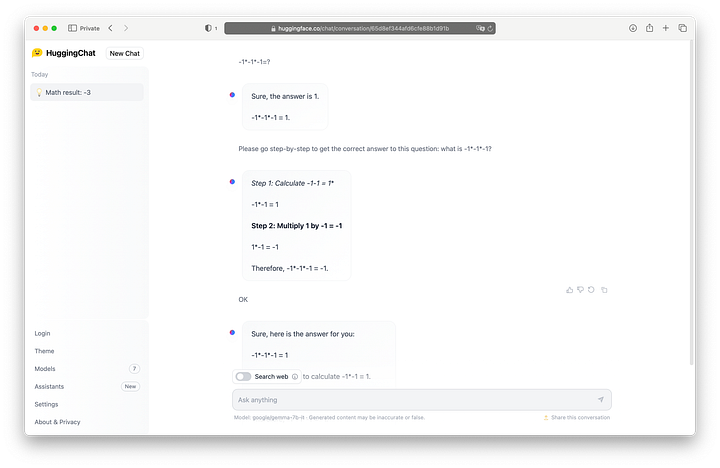

Since LLMs are notoriously hard to evaluate, the best way to assess one is still to play with it for a while. Online, I came across two examples suggesting that something is off with the version of Gemma currently served on HuggingChat: the output is sometimes repeated, and the model seems to handle tokens incorrectly. However, I believe the community can get this fixed.

Summary

According to the reported benchmarks, Gemma seems to be on par with the current open-source state of the art at comparable sizes. Yet, the report did not properly assess its capabilities outside popular, likely in-distribution tasks. It is fantastic to see more open-source models, and Google has done a great job of becoming part of the open LLM ecosystem. Beyond the word-of-mouth benefit, Google expects to sell model safety and hosting services, some of which were marketed directly in the report. Either way, the stock market seemed happy about it.